Cisco chips in; GitHub puts Agents in Actions

Today on Product Saturday: Cisco unveils a new networking chip for massive AI clusters, GitHub automates software-development busywork, and the quote of the week.

Generative AI apps could help software designers break out of a decades-old rut when it comes to how we use their tools. At the very least, they need to rethink their approach to avoid frustrating users.

For everything else that's changed over the last several decades, most people still interact with the applications they use at work and in their personal lives by pointing, clicking, and typing. It's been nearly 20 years since the launch of the iPhone forced designers and software developers to reorient their priorities around its smaller screen and limited computing power, and desktop applications are based on even older design principles.

However, generative AI technology is now forcing application developers to rethink the way users interact with their apps. There has long been a subset of apps and workflows that can be controlled with voice commands, but generative AI apps open up new opportunities to move beyond the taskbar or the mobile hamburger menu.

"I think user interfaces are largely going to go away," former Google CEO Eric Schmidt said on the Moonshots podcast last month. "Why do I have to be stuck in what is called the WIMP interface (windows, icons, menus, and pull downs) that was invented in Xerox PARC, right, 50 years ago? Why am I still stuck in that paradigm?"

Generative AI app users will still need to interact with something, but in Schmidt's view, they'll be able to create their own custom interface simply by giving the system clear instructions about how to present the information they seek, or the results of a task they need completed, once it's done processing. And for more complex apps built around one or more agents, managing the activity of those agents will require a new way of thinking about UI design.

"My experience of a platform or a software is going to be very different from your experience of that platform or software," said Amol Ajgaonkar, CTO at Insight, a solutions integrator helping companies build generative AI apps. "In the future, there won't be one standard way everybody experiences a certain software."

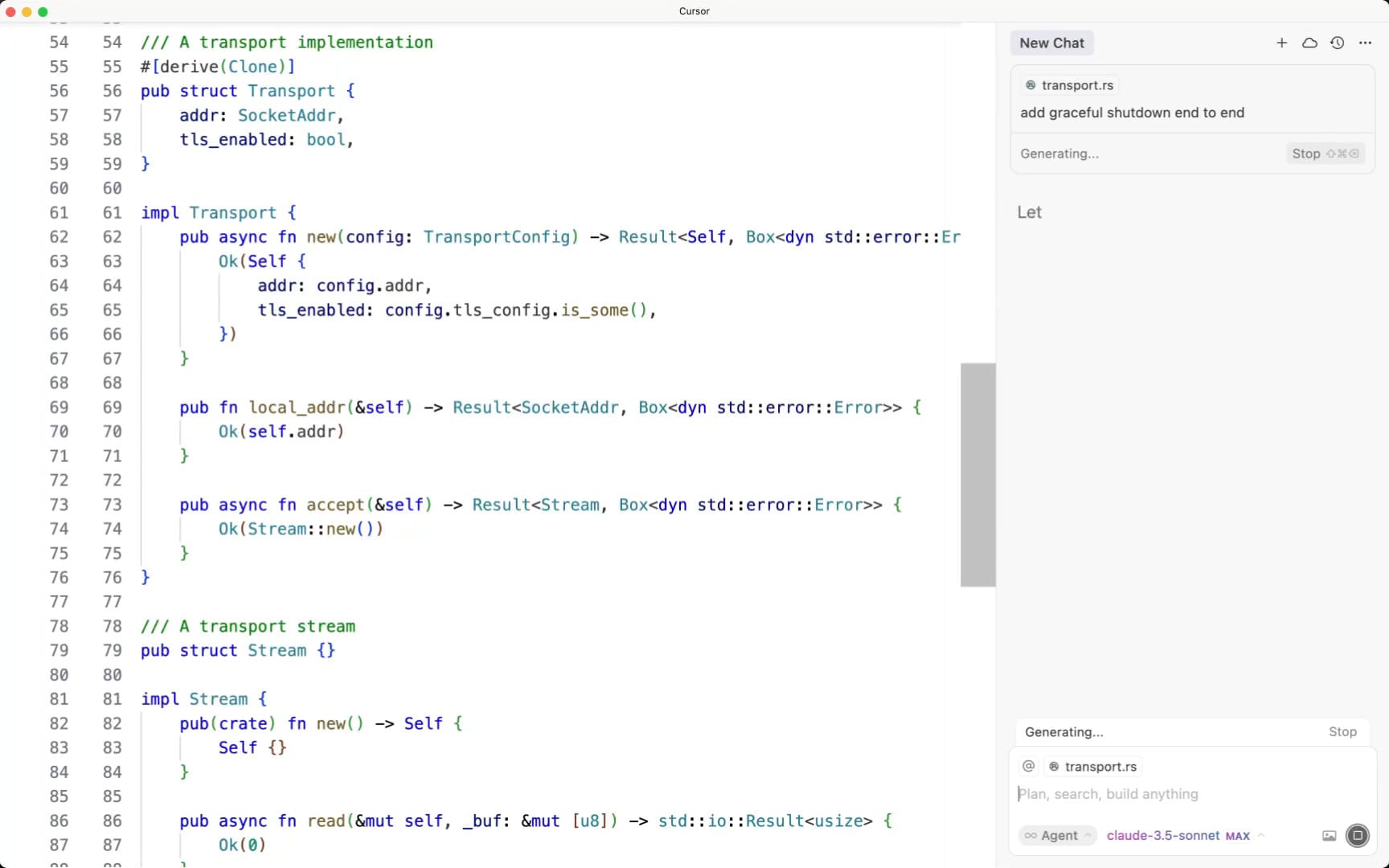

The spartan, Google-like ChatGPT prompt box has been the entry point to generative AI for millions of people, but early AI coding tools like GitHub Copilot, Cursor, and Warp chose to integrate a familiar-looking code-editing interface alongside a generative AI chat interface (usually on the right-hand side) to blend the traditional and the new elements on one screen. That's just one reason why there has been a surge of interest in those apps compared to other generative AI tools.

"In the software world, people have been doing things a certain way forever," said Zach Lloyd, founder and CEO of Warp. For the most part, that involves firing up one of those traditional code editors and actually writing out the code, but getting the most out of generative AI tools and newer coding agents requires users to start in a different place.

"The shift to being like, 'no, I'm actually not going to start by doing that, I'm going to instead start by prompting an agent to do it, and I'm just going to hop in to redirect,' that's very foreign, that's a big change in muscle memory," Lloyd said. Right now, having both elements on the screen makes it easier to understand what's happening, but several experts interviewed on this topic agreed that the balance will likely shift over time toward the agentic interface.

That's because "vibe coders" are quickly learning that whatever they put in the prompt used to set the agent on its way is incredibly important. This is a design challenge, but it's also an opportunity to craft interfaces that prompt the prompters, or encourage them to think of those prompts as an actual spec document that they would present to engineering managers and designers before any coding takes place.

The same idea can also be applied to internal generative AI applications built for sales, finance, or marketing teams. The teams building those apps need to surface the context of what the company wants and needs the app to do right in the user interface to avoid frustrating users.

"For people building in the enterprise context, you're going to want to figure out how to do this at the team and organization level, so it really mirrors the institutional knowledge that the agent needs to have in order to do a good job doing your job," Lloyd said.

Generative AI isn't just changing the way users work with business applications; it's also changing the way those applications present the output of a very basic business query such as "How did our sales in the Pacific Northwest region last quarter compare to the previous year?"

In a traditional application, finding the answer to that question would probably require the user to click on drop-down menus for the region and time period and select a format for the output. If the boss prefers a spreadsheet-like list of numbers, that's probably what everybody else in the organization will adapt to working with, but tools built around LLMs could customize the output based on what they know about the user.
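As a rough illustration of that difference, the sketch below contrasts a request where the user picks every option by hand with one where the format is filled in from a stored profile. The preference store, the user names, and the format labels are all hypothetical, invented here for illustration; no real product's behavior is implied.

```python
# Hypothetical preference store: what the system "knows" about each user.
# The user names and format labels are illustrative assumptions.
USER_FORMAT_PREFERENCES = {
    "boss": "spreadsheet",
    "analyst": "bar chart",
}

# Fallback when nothing is known about the user.
DEFAULT_FORMAT = "spreadsheet"


def traditional_request(region: str, period: str, output_format: str) -> dict:
    """Drop-down style: the user must choose every option, including format."""
    return {"region": region, "period": period, "format": output_format}


def personalized_request(user: str, region: str, period: str) -> dict:
    """LLM-tool style: the format comes from what is known about the user."""
    output_format = USER_FORMAT_PREFERENCES.get(user, DEFAULT_FORMAT)
    return {"region": region, "period": period, "format": output_format}


if __name__ == "__main__":
    print(personalized_request("analyst", "Pacific Northwest", "last quarter"))
```

In the second function the user never touches a format menu; the choice follows them from one query to the next.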

"Developers and UX designers can collaborate to come up with really unique experiences that are catered towards the person who's interacting with it," Ajgaonkar said. For example, the system could understand that the business analyst who prompted the application prefers data presented a certain way based on their previously expressed preferences, but that if the prompt contains something like "this report is for the VP of Operations," the output could be tailored to that person's preferences.

But as seen in the coding apps, right now a lot of generative AI apps require the user to construct the prompt in just the right way to get the desired output. The generation growing up with these tools gets it, but how to do that is far from clear to many other potential users of an app.

"There is no doubt that [chat interfaces] are the best interface for certain use cases, but I don't think everything has to be that," Ajgaonkar said. Small language models that can run locally on a user's system and were trained on corporate data could make it much easier to get the desired result without having to obsess over the exact construction of the natural-language commands, he said.

But generative AI apps pose yet another challenge and opportunity for designers and developers: security. Poorly designed apps could compromise internal corporate data that was never meant to leave company networks, and getting that balance right in the frantic early days of generative AI app development could save companies and users from a lot of problems down the road.

Margaret Price, senior director for strategy at Microsoft, likened the current state of generative AI apps to the state of email apps in the early 2000s. Those apps gave users little to no context about attachments that arrived in their inboxes, which led to the proliferation of worms and viruses that caused serious damage to corporate networks when people weren't given the tools to understand the danger.

"Good design matters in security, because even a strong system can fail if the user experience can lead people to danger," Price said. "We have so many years of examples from history of huge security problems that are actually UX issues, and we still have all of those problems today when design is not prioritized."

This is especially top-of-mind for companies that are plunging headlong into the agentic AI era, where users are authorizing computers to take actions on their behalf without necessarily knowing the full impact or scope of those decisions.
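One way to picture the design problem is a small permission check that sits between an agent and the actions it wants to take. The sketch below is only an illustration: the action names, the `AgentPermissions` class, and the `authorize` function are hypothetical and not drawn from any product mentioned here.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentPermissions:
    # Actions the agent may take without asking (hypothetical names).
    allowed: set = field(default_factory=set)
    # Actions that need the user's approval every time.
    needs_consent: set = field(default_factory=set)


def authorize(perms: AgentPermissions, action: str,
              ask_user: Callable[[str], bool]) -> bool:
    """Return True only if the action is pre-approved or the user consents."""
    if action in perms.allowed:
        return True
    if action in perms.needs_consent:
        # Surface the action to the user instead of acting silently.
        return ask_user(action)
    # Anything not listed is denied by default.
    return False


if __name__ == "__main__":
    perms = AgentPermissions(allowed={"read_invoice"},
                             needs_consent={"send_payment"})
    # The lambda stands in for a real consent prompt in the interface.
    print(authorize(perms, "send_payment", lambda action: False))
```

Denying unlisted actions by default is one possible choice here; it means the user sees a prompt, or nothing happens, rather than the agent quietly widening its own scope.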

"There's a huge responsibility for design to flag what actions they're taking and where they're taking those actions to make sure that people are provided informed consent, and that consent will shift and change over time," she said.

While it's not clear how many companies have actually moved beyond simple chat-based generative AI apps into full-on agentic AI apps, those that have adopted such tools tell a cautionary tale.

Steve Yegge of Sourcegraph, who brings a distinctively colorful voice to any discussion about the future of software development, recently characterized coding with agents as marshalling a herd of "programming brutes," who need constant attention to keep them on track.

"Somehow my agents have turned into my babies, programming-brute baby birds in a nest of console windows, squawking at me, mouths agape," he wrote. It's fair to assume that agents built to generate invoices or purchase orders might not be quite so brutish, but users managing those agents will still need clear and concise design direction from their makers to help them make the most out of these powerful but, for now, crude tools.

"A lot of the primitives that were built for people are going to work, like, okay, but you're going to be lacking a lot of the visibility that you could have" in more traditional software systems, Lloyd said. "If you're designing an agentic product that someone is going to use, you have to design upfront the permissions and security model for the agents."